- Imagine waking up in pain, visiting countless doctors, and still hearing, "We don't know what's wrong." That's where one person found themselves, until they turned to AI for answers.

- This article dives into a personal journey using ChatGPT as a healthcare ally when traditional medicine hit a dead end.

- It explores how AI can offer insights and treatment plans, emphasizing the need for privacy and professional medical advice.

- While AI isn't a substitute for doctors, it can be a powerful tool in diagnostics and treatment planning.

- The story highlights AI's potential to transform healthcare, urging a balanced approach where technology complements human expertise.

This article shares a personal story of leveraging AI, specifically ChatGPT, in healthcare when traditional medical solutions fell short. It highlights the potential of AI as a supplementary tool for diagnostics and treatment planning, while emphasizing the importance of privacy, critical evaluation, and professional medical advice.

- AI in Healthcare: The article explores how AI tools like ChatGPT can offer potential treatment plans and insights when conventional medicine hits a dead end.

- Data Utilization: Success with AI requires organizing comprehensive medical data for effective analysis and tailored recommendations.

- Ethical Considerations: Privacy and data security are crucial when using AI in healthcare.

- Human-AI Collaboration: AI is portrayed as a powerful assistant that complements, not replaces, medical professionals.

- Future of Healthcare: The narrative underscores the evolving role of AI, suggesting a balanced approach where technology empowers both patients and doctors.

Sometimes, life throws you a curveball that no one—not even the experts—sees coming. Earlier this year, I found myself on the receiving end of one such curveball.

I was waking up in excruciating pain, making repeated trips to the ER, and consulting with multiple doctors. After a series of medical tests and consultations, I was left with something no one ever wants to hear: “We don’t know what’s going on with you.”

Frustrated, confused, and still in pain, I was at a complete loss. Traditional medicine had hit a wall, and I was left with more questions than answers. But instead of resigning myself to the uncertainty, I decided to try something that, even a year ago, would’ve seemed like a wild idea—I turned to AI for help.

When the Doctors Can’t Help, Turn to the Machines

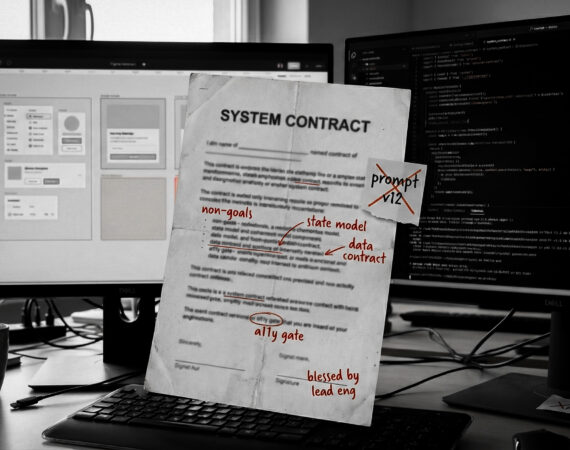

You’ve probably heard of ChatGPT, right? At the time, I was using it for everything—from writing emails and documentation to analysing research data and brainstorming ideas. But it turns out this AI has a few more tricks up its sleeve. Desperate for answers, I uploaded all my test results and medical data into ChatGPT, hoping it could shed some light on my situation.

A few moments later, the AI created a treatment plan. I was sceptical, but at this point, it was my last hope. This wasn’t a doctor with years of experience under their belt and a medical license—it was a machine.

But it was a machine that had processed more medical data than any human ever could. So, I decided to give it a shot. The plan didn’t involve surgery, pills, or therapy. Instead, it was a strict set of rules, diet, meal plans, and schedules. It wasn’t easy, but it was better than hearing, “We don’t know.”

Here’s the wild part: it worked. Today, I’m pain-free, feeling better than ever, and still wrapping my head around how it all happened.

Bridging the Gap: From My Experience to the Strawberry Model

“This is the final warning for those considering careers as physicians: AI is becoming so advanced that the demand for human doctors will significantly decrease, especially in roles involving standard diagnostics and routine treatments, which will be increasingly replaced by AI.…”

— Derya Unutmaz, MD (@DeryaTR_) September 13, 2024

Lately, there’s been a lot of talk about OpenAI’s new “Strawberry” model (or o1-preview), a cutting-edge AI reported to outperform earlier models in medical diagnostics, with an 80% accuracy rate on the AgentClinic-MedQA dataset compared to GPT-4o’s ~50%. But my story? It happened before Strawberry even hit the scene.

If an earlier version of AI could do this for me, what does that say about the future of healthcare? It’s not just about technology getting smarter; it’s about what that means for us—patients who are tired of feeling lost in the system.

But before you start feeding your medical history into an AI tool, let’s talk about something crucial—privacy.

The Ethical and Privacy Considerations of Using AI for Healthcare

Sharing personal medical information with AI platforms can be a double-edged sword. While AI can analyse data at an unprecedented scale, you need to be mindful of where and how you share your information. Always ensure that the platform you’re using follows stringent data privacy standards, encrypts your information, and doesn’t store your data without consent.

Systems thinking is key here: understand the bigger picture of how your data flows and how it might be used. You’re not just a patient; you’re part of a larger system where data is a valuable asset. Protect it.
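One practical way to protect your data is to strip obvious identifiers from a document before pasting it into any AI tool. The Python sketch below is only an illustration: the `redact` function, the three patterns, and the sample text are all hypothetical, and simple pattern matching like this will miss plenty of identifying details, so treat it as a first pass rather than real de-identification.

```python
import re

# Hypothetical patterns for illustration only. They catch a few obvious
# identifier formats and will miss many others.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d+\b"),
}


def redact(text: str, names: list[str]) -> str:
    """Replace the given names and any pattern matches with placeholder tags."""
    for name in names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    for tag, pattern in PATTERNS.items():
        text = pattern.sub(f"[{tag}]", text)
    return text


# Made-up sample text, not a real record.
sample = (
    "Patient Jane Doe, MRN: 445566, phone 555-123-4567, "
    "jane.doe@example.com. Marker A 7.5 units."
)
print(redact(sample, names=["Jane Doe"]))
```

The result still needs a read-through by eye before you share it, because anything the patterns don't recognise passes straight through.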

Remember, while AI can offer insights, it’s not a replacement for professional medical advice. It’s a tool, not a licensed healthcare provider. Any treatment plan suggested by an AI should be critically evaluated and discussed with your doctor before you take any action.

Medical disclaimer: The advice provided by AI, including ChatGPT, is not a substitute for professional medical consultation. Always seek the guidance of your healthcare provider with any questions you may have regarding a medical condition.

Step-by-Step: How to Leverage AI in Your Healthcare Journey

Here’s how you can use AI to take control of your health, just like I did:

1. Start with Your Data

Doctors are trained to look for patterns, but they’re still human. They can only process so much information at once. AI, on the other hand, thrives on data—every bit of it. So, gather everything you’ve got: lab results, imaging reports, medical history, and even that scribbled note your doctor made at your last visit.

Organize it. Make sure it’s clear and ready to be analysed. The more precise you are with your data, the better the results you’ll get.
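If you’re comfortable with a little Python, here is a minimal sketch of what “organized” could look like: each result becomes one structured record, and the records are sorted by date and flagged against their reference range. The `LabResult` class, its field names, and the sample values are all hypothetical placeholders. Real reference ranges should come from your own lab reports.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class LabResult:
    """One test result, with the reference range printed on the lab report."""
    taken_on: date
    test: str
    value: float
    unit: str
    ref_low: float
    ref_high: float

    def flag(self) -> str:
        """Label the value relative to its reference range."""
        if self.value < self.ref_low:
            return "LOW"
        if self.value > self.ref_high:
            return "HIGH"
        return "normal"


def summarize(results: list[LabResult]) -> str:
    """Render results as one line each, oldest first."""
    lines = []
    for r in sorted(results, key=lambda r: (r.taken_on, r.test)):
        lines.append(
            f"{r.taken_on.isoformat()} | {r.test}: {r.value} {r.unit} "
            f"(ref {r.ref_low}-{r.ref_high}) [{r.flag()}]"
        )
    return "\n".join(lines)


# Placeholder values, not real lab data.
results = [
    LabResult(date(2024, 3, 2), "Marker B", 4.0, "units", 5.0, 10.0),
    LabResult(date(2024, 1, 15), "Marker A", 7.5, "units", 5.0, 10.0),
]
print(summarize(results))
```

A plain chronological list like this is easy to paste into a chat, and just as easy to hand to your doctor.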

2. Ask the Right Questions

Rather than asking a broad question like, “What’s wrong with me?”, I opted for a more strategic approach. I framed specific, targeted questions such as, “Given these test results and symptoms, what are the potential diagnoses?”, “What lifestyle changes could help improve my condition?”, “Are there any dietary adjustments or exercises you would recommend?”, and “What are the recommended next steps?”

When engaging with AI, think of it as conversing with a highly knowledgeable and patient friend who can sift through medical data at lightning speed. Avoid vague questions and assumptions. Instead, be clear and direct with your instructions, and encourage the AI to ask follow-up questions if necessary. This interactive exchange allows the AI to provide more accurate and relevant responses tailored to your situation.
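To make that concrete, here is a rough sketch of assembling such a prompt in Python. The question list comes from the examples above. Everything else (the `build_prompt` function, the section labels, the sample symptoms) is an assumption made for illustration, not a required format.

```python
from typing import Sequence

# The targeted questions quoted earlier in this step.
QUESTIONS = (
    "Given these test results and symptoms, what are the potential diagnoses?",
    "What lifestyle changes could help improve my condition?",
    "Are there any dietary adjustments or exercises you would recommend?",
    "What are the recommended next steps?",
)


def build_prompt(
    symptoms: Sequence[str],
    data_summary: str,
    questions: Sequence[str] = QUESTIONS,
) -> str:
    """Combine symptoms, organized data, and numbered questions into one prompt."""
    parts = [
        "Symptoms:",
        *[f"- {s}" for s in symptoms],
        "",
        "Test results:",
        data_summary,
        "",
        "Questions:",
        *[f"{i}. {q}" for i, q in enumerate(questions, start=1)],
        "",
        "If anything is unclear or missing, ask me follow-up questions before answering.",
    ]
    return "\n".join(parts)


# Made-up example input.
print(build_prompt(
    symptoms=["pain on waking", "worse after meals"],
    data_summary="2024-01-15 | Marker A: 7.5 units (ref 5.0-10.0) [normal]",
))
```

The closing line of the prompt explicitly invites follow-up questions, which is what keeps the exchange interactive.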

3. Evaluate the Advice Critically

Just because an AI suggests something doesn’t mean you should act on it immediately. Always cross-check its recommendations with reliable sources, consult your healthcare provider, and trust your own judgment. AI can analyse data, but it can’t feel your pain or understand your unique context like a human can.

In my case, ChatGPT offered several potential diagnoses and a comprehensive treatment plan centred around diet, exercise, and lifestyle adjustments. I didn’t take this information at face value. Instead, I checked each suggestion against trusted medical sources.

By being specific with my questions and cautious with the advice, I turned ChatGPT into a valuable tool, not a standalone solution. I took the AI-generated treatment plan, reviewed it with a doctor, and together we refined it to better fit my needs.

This isn’t about replacing your doctor—it’s about equipping them with a super-powered assistant.

4. Implement Gradually and Monitor Closely

If you decide to follow an AI-generated plan, do it carefully. Implement changes step-by-step and monitor your condition closely. Keep notes, track your progress, and don’t hesitate to make adjustments. AI might be able to analyse data, but you’re the one living in your body—you know it best.
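Tracking doesn’t need to be fancy. As a minimal sketch, assuming you write down one pain score per day on a 0 to 10 scale (lower is better), the hypothetical `trend` function below compares the average of the most recent week with the week before it. The scale, the seven-day window, and the sample scores are all illustrative choices.

```python
from statistics import mean


def trend(scores: list[int], window: int = 7) -> str:
    """Compare the mean of the latest `window` scores with the window before it.

    Scores are daily pain ratings where lower means less pain.
    """
    if len(scores) < 2 * window:
        return "not enough data"
    recent = mean(scores[-window:])
    previous = mean(scores[-2 * window:-window])
    if recent < previous:
        return "improving"
    if recent > previous:
        return "worsening"
    return "unchanged"


# Made-up scores for two weeks, oldest first.
daily_scores = [7, 7, 6, 7, 6, 7, 7, 5, 5, 4, 4, 3, 3, 2]
print(trend(daily_scores))
```

A simple comparison like this says nothing about why the numbers moved, so bring the notes to your doctor rather than drawing conclusions from the trend alone.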

5. Keep an Open Mind

AI isn’t the be-all and end-all of healthcare. There’s still a lot it can’t do. But it’s a tool—a very powerful one—that can help bridge the gaps in traditional medicine.

Keep an open mind. What worked for me might not work for you, but if you’re stuck in the healthcare maze, why not give it a shot?

Where Do We Go from Here?

AI models like Strawberry are set to change how we think about healthcare, but it’s not about replacing doctors. It’s about empowering us—patients, doctors, and everyone else—to make better, more informed decisions. That’s where critical thinking comes into play—understanding the limits of what AI can do and using it to complement human judgment, not replace it.

My experience was a wake-up call. It showed me that the future of healthcare is already here, and it’s moving fast. Maybe faster than we’re ready for. But whether we’re ready or not, the train’s leaving the station. So, let’s get on board, ask the right questions, and use these tools to take back control of our health.

What do you think? Is AI the future of healthcare, or are we still better off with a human touch?

I’d love to hear your thoughts. After all, we’re all in this together—humans and machines alike.