AI insights

-

Why do AI tools often fail despite their initial promise?

AI tools frequently fail due to data degradation, limitations in model training, and unrealistic user expectations. These factors contribute to a decline in AI's reliability, leading to more frequent errors.

-

What is 'model collapse' in AI?

Model collapse occurs when AI models are trained on low-quality, AI-generated data, which causes their errors to increase over time. This is a significant issue because it undermines the reliability of AI systems.

-

How does AI impact learning in high school settings?

A study conducted in a Turkish high school found that AI, specifically GPT-4, can both aid and harm learning. AI tutors covered a portion of the math curriculum, but their impact on learning varied.

-

What are the ethical considerations when using AI in healthcare?

When using AI in healthcare, it is crucial to consider privacy and data security. Addressing these ethical considerations helps ensure that patient information is protected while AI is used for diagnostics and treatment planning.

-

What potential does AGI hold for UX design?

AGI, which some forecasts expect by 2027, could revolutionize UX design by understanding, learning, and adapting like a human. That transformation would create new opportunities and responsibilities for UX designers.

-

How can users mitigate the issues of AI's declining reliability?

Users can mitigate AI's declining reliability by optimizing AI use and avoiding common pitfalls. Practical steps include ensuring high-quality data and setting realistic expectations for AI capabilities.

-

What role does data quality play in AI's performance?

Data quality is crucial for AI's performance, as poor data can lead to model collapse and increased errors. Ensuring high-quality data is essential for maintaining AI reliability.

- Hey there!

- If you've been relying on AI tools for your daily tasks, you've probably noticed they're not as reliable as they used to be.

- This article dives into why AI's performance is slipping, pointing to issues like data degradation and the limitations of model training.

- It highlights how AI's reliance on low-quality, AI-generated data is causing "model collapse," leading to bizarre and faulty outputs.

- The piece also offers practical advice for users: train AI effectively, verify its outputs, and use human judgment for critical tasks.

- So, if you're looking to make the most of AI without falling into its traps, this read is for you.

This article explores why AI tools, despite their initial promise, are increasingly prone to errors and what users can do to mitigate these issues. It examines how data degradation, the limitations of model training, and unrealistic user expectations contribute to AI’s declining reliability, while offering practical steps for optimizing AI use without falling into common pitfalls.

- Data Quality Decline: AI models face “model collapse” due to reliance on low-quality, AI-generated data, leading to more frequent errors.

- Limitations in AI Understanding: AI’s statistical nature means it lacks true understanding and relies on pattern matching, which becomes unreliable as data quality drops.

- Examples of Failures: Cases like faulty legal contract advice and bizarre AI responses highlight current AI reliability issues.

- Setting Realistic Expectations: Users should train AI effectively, verify its output, and use it cautiously for critical tasks.

- Practical Safeguards: Implementing oversight processes and knowing when human judgment is essential are key to leveraging AI’s potential while mitigating its risks.

If you’re like me, using AI for everything almost 24/7, you’ve probably noticed a shift. When generative AI first came onto the scene, we all knew to be cautious. There was an understanding that you’d have to verify and proofread its output, almost like you were dealing with a rookie. But we were okay with that because the promise of what it could do was incredible. Fast forward to now, and something has changed.

AI has become such a valuable part of our workflows that we’ve grown to expect it’ll just work. We lean on it for business-critical tasks, assuming it’s got our back. But here’s the reality check: it doesn’t always work. I’ve found myself spending more time fixing its answers than benefiting from them. That hit home hard when I was preparing a contract for a client.

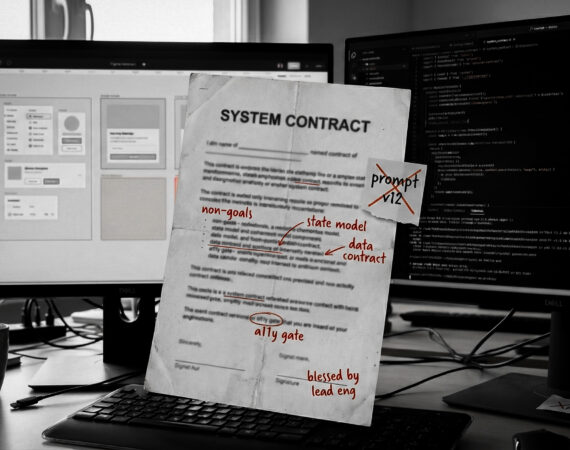

After experiencing these issues firsthand, I started looking into recent studies to see if others were noticing the same patterns, and they were. I came across a study from Epoch AI predicting that by 2026, we could run out of high-quality data to train AI models [source: Epoch AI Study]. As more AI-generated content gets recycled into training data, a process called “model collapse” can set in. This term, as highlighted in a Nature study, refers to AI systems progressively losing their ability to generate accurate information over time because they’re trained on lower-quality data [source: Nature].
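The feedback loop behind model collapse can be illustrated with a deliberately tiny toy model. This is a sketch under stated assumptions, not how any production LLM is trained: the “model” is just a normal distribution, generation 0 stands in for a model fitted to human data, and every later generation is refitted only to samples drawn from the previous generation. The function name and its parameters are hypothetical.

```python
import random
import statistics


def simulate_model_collapse(generations: int = 200,
                            samples_per_generation: int = 20,
                            seed: int = 0) -> list[float]:
    """Toy illustration of recursive training on self-generated data.

    Assumption: the "model" is a Gaussian. Generation 0 has mean 0 and std 1
    (standing in for human data); each later generation is fitted only to
    samples drawn from the previous generation's model.
    Returns the fitted standard deviation at every generation.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0
    history = [std]
    for _ in range(generations):
        # This generation's "training data" is purely synthetic.
        data = [rng.gauss(mean, std) for _ in range(samples_per_generation)]
        # Refit by maximum likelihood: sample mean and population std.
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)
    return history


if __name__ == "__main__":
    stds = simulate_model_collapse()
    for gen in (0, 50, 100, 150, 200):
        print(f"generation {gen:3d}: fitted std = {stds[gen]:.6f}")
```

With only 20 samples per generation, the fitted spread tends to shrink toward zero over a couple of hundred generations, so values that once appeared in the tails of the distribution stop being produced at all. That is a rough analogue of the degradation the Nature study describes, not a reproduction of its experiments.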

The Contract That Could’ve Cost Me

I thought I had it covered. I was using a Contract Law GPT, a GPT tailored specifically to legal documents, to review my contract. It should’ve been simple: a quick scan, a few tweaks, and I’d be done. Instead, I got some of the strangest advice I’ve ever seen, with answers that no lawyer, or even a half-awake law student, would sign off on. I ended up rewriting most of it manually, wasting almost an hour in the process.

That’s when I realized: AI isn’t as reliable as we’ve let ourselves believe. We’ve gone from cautiously verifying its output to expecting it to be flawless—and that’s where we get into trouble.

Why Is AI Getting Worse?

The problem lies in both the data AI models are being trained on and the limitations of how these models work.

- Data Degradation: Generative AI models like GPT rely on vast datasets scraped from the web, but much of that data is of questionable quality. As more AI-generated content circulates online, it ends up being re-ingested by these models. This feedback loop of training AI models on AI-generated data is at the heart of the “model collapse” issue outlined in the Nature study. Instead of learning only from diverse, high-quality human content, AI increasingly feeds on its own flawed output, which leads to more inaccuracies over time [source: Nature Study].

- Statistical Nature of AI: Large language models (LLMs) like GPT work by predicting the next word (technically, the next token) in a sequence based on probabilities. These models aren’t “thinking” or “understanding” as humans do; they’re matching patterns across an enormous dataset. When the training data is rich and varied, these models can perform remarkably well. But as the quality of the data degrades, so does the accuracy of their predictions. This helps explain why we’re seeing more hallucinations: AI confidently producing incorrect or bizarre answers.

- Training and Cost Limitations: Training AI models is costly, and developers have to balance training on massive datasets against optimizing for accuracy. To save resources, some models end up undertrained, while others are overtrained and start overfitting, where the AI becomes too specialized to its training data and less adaptable to new, unseen data. This is another factor behind AI’s declining performance.
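The “predicting the next word based on probabilities” idea from the statistical-nature bullet can be made concrete with a bigram model. It is far simpler than a transformer-based LLM like GPT, which uses a learned neural network over long contexts rather than raw word-pair counts, but it shares the core weakness: it predicts purely from how often words followed each other in its training text. A minimal sketch; the function names and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Turn raw text into next-word probabilities by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Every adjacent (current word, following word) pair is one observation.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    # Normalize counts so each word maps to a probability distribution over followers.
    model = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: n / total for nxt, n in followers.items()}
    return model


def predict_next(model: dict[str, dict[str, float]], word: str):
    """Return the most probable next word, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None  # No pattern to match, so no prediction.
    return max(followers, key=followers.get)


if __name__ == "__main__":
    human_text = [
        "the contract is signed",
        "the contract is void",
        "the contract is signed today",
    ]
    # Hypothetical low-quality synthetic text leaking into the training data.
    synthetic_text = ["the contract is optional"] * 3

    clean = train_bigram_model(human_text)
    polluted = train_bigram_model(human_text + synthetic_text)
    print(clean["is"])                   # probabilities learned from human text only
    print(predict_next(clean, "is"))     # signed
    print(predict_next(polluted, "is"))  # optional
```

Adding three copies of one bad sentence flips the prediction even though the model’s logic never changed. The model has no idea whether a contract can actually be optional; it only mirrors the statistics of what it was fed.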

The result? Hallucinations. Bizarre facts. And, in my case, contract advice that no one in their right mind would follow.

Comparative Analysis of Leading AI Models

To better understand the current landscape of generative AI, let’s break down the strengths, weaknesses, and challenges of three major AI models: ChatGPT (GPT-4), Google’s Gemini, and Perplexity AI. Below is a comparative analysis, showcasing the use cases and technical limitations of each.

| | ChatGPT (GPT-4) | Google’s Gemini | Perplexity AI |
|---|---|---|---|
| Strengths | Versatile for content generation, customer support, creative writing; large datasets and deep learning architecture. | Efficient in data retrieval and summarization; integrated with Google’s search infrastructure. | Transparent about sources; good for research and information retrieval. |
| Weaknesses | Becoming ‘lazier’ and more prone to hallucinations; suffers from model collapse due to low-quality training data. | Known for generating incorrect or strange suggestions; its retrieval-augmented generation approach is prone to misinformation. | Declining accuracy; no longer reliably provides proper citations; suffers from data degradation and low-quality sources. |
| Use Case | Best for creative and low-risk applications but risky for fact-checking and complex tasks. | Good for quick information gathering but requires oversight due to misinformation risks. | Best suited for research-based tasks but showing signs of declining reliability. |
| Technical Challenges | Suffers from hallucinations; prone to inaccuracies as data quality declines. | Flawed data retrieval leads to misinformation; hallucinations are common. | Accuracy decline due to reliance on poor-quality sources; suffers from data feedback loop issues. |
| Reference | BusinessWeek, Nature, Epoch AI | MIT Technology Review, Nature | BusinessWeek, Epoch AI |

As the table illustrates, each AI model has its strengths and weaknesses, particularly when it comes to data accuracy and reliability. ChatGPT remains a versatile tool for content generation but has suffered from increased hallucinations and declining performance. Google’s Gemini is powerful for quick information retrieval but frequently generates incorrect or absurd answers. Lastly, Perplexity AI is valued for its transparency in sourcing but has recently shown signs of degradation in accuracy.

How We Got Here

If you’ve been using generative AI, you’ve probably seen this firsthand. Early on, it was promising. But GPT-4 users have reported that it seems “lazier” and “dumber” than it was a year ago [source: BusinessWeek]. The tools we used to trust are becoming unreliable, leaving us guessing whether we’ll get useful results.

Even Google’s AI Overviews, rolled out in May 2024, produced answers like telling users to add glue to their pizza or claiming that U.S. President Andrew Johnson earned degrees decades after his death [source: MIT Technology Review]. These aren’t harmless mistakes; when you’re relying on AI for business-critical decisions, they can cost you real money.

Setting Realistic Expectations

Generative AI is like a hyperactive intern—it’ll give you answers fast, but they won’t always be right. The key is learning how to manage it, and that starts with setting realistic expectations. This tech isn’t perfect, but it’s also not useless if you approach it wisely.

So, how do we avoid getting burned by AI? It’s all about having the right processes in place.

Step 1: Train It Well, But Don’t Rely on It Blindly

Investing in AI training is a must. If you don’t put in the effort to refine what it knows, you’ll get sloppy answers. But even with the best training, it’ll still make mistakes. Double-check everything it gives you, and don’t skip the critical thinking part.

Step 2: Build in Safeguards

I can’t stress this enough—AI needs oversight. Every time you use it for something important—whether it’s a legal contract, a UX strategy, or a business proposal—put it through a human filter. You need processes to catch errors before they turn into costly mistakes. Relying on AI without a safety net is a fast track to trouble.
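One way to turn “put it through a human filter” into an actual process is a simple release gate: anything the AI drafts for a high-stakes task stays blocked until a named person has signed off. This is a hypothetical sketch, not part of any real tool; the `Stakes`, `AIDraft`, `needs_review`, `approve`, and `release` names are invented for illustration, and it assumes you classify each task as low or high stakes yourself.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stakes(Enum):
    LOW = "low"    # e.g. brainstorming, rough first drafts
    HIGH = "high"  # e.g. legal contracts, UX strategy, business proposals


@dataclass
class AIDraft:
    task: str
    stakes: Stakes
    text: str
    approved_by: Optional[str] = None
    review_notes: List[str] = field(default_factory=list)


def needs_review(draft: AIDraft) -> bool:
    """High-stakes output must pass a human filter before anyone relies on it."""
    return draft.stakes is Stakes.HIGH and draft.approved_by is None


def approve(draft: AIDraft, reviewer: str, note: str) -> AIDraft:
    """Record who checked the draft and what they changed or verified."""
    draft.review_notes.append(f"{reviewer}: {note}")
    draft.approved_by = reviewer
    return draft


def release(draft: AIDraft) -> str:
    """Hand over the text only once the required review has happened."""
    if needs_review(draft):
        raise PermissionError(f"'{draft.task}' is high-stakes and has not been reviewed")
    return draft.text


if __name__ == "__main__":
    contract = AIDraft("client contract review", Stakes.HIGH, "AI-suggested clauses")
    print(needs_review(contract))  # True: blocked until a person signs off
    approve(contract, "account lead", "rewrote the liability clause by hand")
    print(release(contract))
```

The design choice here is to fail loudly: an unreviewed high-stakes draft raises an error instead of quietly slipping through, which keeps human judgment in the loop for exactly the tasks where mistakes are costly.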

Step 3: Know When to Step In

AI won’t solve every problem, and it’s important to know when it’s time to step in yourself. When it comes to high-stakes situations, there’s no substitute for human judgment. Trust your expertise over the AI’s output, especially when something doesn’t seem right.

Where We Go from Here

We’re still in the early stages of this AI revolution, and while the tech is getting more advanced, the truth is it’s also getting messier. The models we rely on are becoming lazier, and without better oversight, we’re going to see more of those weird, off-the-wall responses that leave us scratching our heads.

But here’s the optimistic part: we can control how we use it. We’re the ones steering the ship. With the right training, processes, and expectations, AI can still be a game-changer. It’s all about knowing when to trust it, and when to do the work yourself.

At the end of the day, leadership is about making smart decisions. AI is a tool, but we’re the ones who need to wield it wisely.

You already know that investing in critical thinking is what separates good leaders from great ones.

How are you managing AI in your workflows?