- AI can turn messy thinking into fluent output, which is handy but risky: a polished plan can win support before it’s tested, and confidence can outrun understanding.

- The fix isn’t less AI, but better calibration.

- The article offers a 15–20 minute Decision Brief, a Feynman‑style ritual that asks you to explain the decision plainly, name three assumptions, attach a confidence tag (60%/80%/95%), state the strongest counterargument, and specify the smallest safe test.

- Let AI be a sparring partner to surface edge cases and design minimal experiments, not to write the case after you’ve decided.

- At scale, calibration separates speed from wisdom.

AI is excellent at turning messy thinking into fluent output. For example, an AI-generated “strategy document” can look executive-ready even when the underlying evidence is thin. That fluency is both useful and risky.

In leadership settings, fluency often gets mistaken for completeness. A plan that sounds coherent can win support before its assumptions are tested. Over time, this creates a predictable failure mode: confidence increases faster than understanding.

This post is about preventing that drift.

Think of the ritual below as speed insurance: 15–20 minutes of structured clarity to avoid months of unwinding a confident mistake.

It works whether you author decisions or approve them.

When Process Became the Product

I led a portfolio of cross-functional initiatives in a large, multicultural organization where scale and interdependencies made “quick fixes” deceptively risky.

Our team leader did not have deep context on every detail of the work, and he handled that well. He stayed oriented to outcomes, maintained regular check-ins, and gave me room to execute. The team developed a workable operating rhythm and shared norms. It was not perfect, but it was stable and productive.

A leadership change introduced a new layer of management. After a brief review of projects, delivery, and process, the new leader concluded the function should be rebuilt from scratch.

One specific shift he pushed was governance: he replaced our lightweight intake triage with a centralized approval gate and a heavier weekly reporting cadence. That single change slowed decisions, distorted priorities, and encouraged local teams to route around the process.

That governance change cascaded. The operating rhythm fractured, attrition increased, and delivery degraded. Within two years, the organization transitioned him out of the role.

The point is not intent; it is calibration.

Significant organizational change requires proportionate understanding, evidence, and iteration. A confident narrative can outrun system knowledge, and AI can accelerate that gap by making premature conclusions sound comprehensive, validated, and “ready to execute.”

The core risk: fluency inflates confidence

Confidence is not the enemy. Leaders need enough of it to align teams and commit resources.

The issue is uncalibrated confidence: certainty that is not matched to evidence, context, and feedback. AI can unintentionally increase that mismatch because it produces:

- Clear, persuasive language even when inputs are partial.

- “Best practice” patterns that look universal but ignore local constraints.

- Smooth summaries that hide the cost of what was not examined.

In other words, AI can make work appear finished before it is understood.

What changes at scale: second-order effects inside organizations

When everyone can generate polished narratives quickly, the organizational signal-to-noise ratio shifts.

- Evaluation overload: proposals get cheaper to produce than they are to evaluate. This creates a backlog of high-confidence plans competing for attention.

- Narrative power drift: influence can shift toward people who control the narrative (or the tooling) rather than those who understand the system. Experts spend more time defending nuance against “clean” summaries.

- Velocity without learning: decision speed can rise without a matching increase in learning speed. You get more movement, but not necessarily more accuracy.

- A dissent tax: once a polished narrative circulates, it becomes harder to raise uncertainty without sounding like you are “blocking progress.”

Net effect: more polished motion, weaker detection, and a higher political cost to slow down.

The remedy is not “use less AI.” It is to use AI with better calibration mechanics.

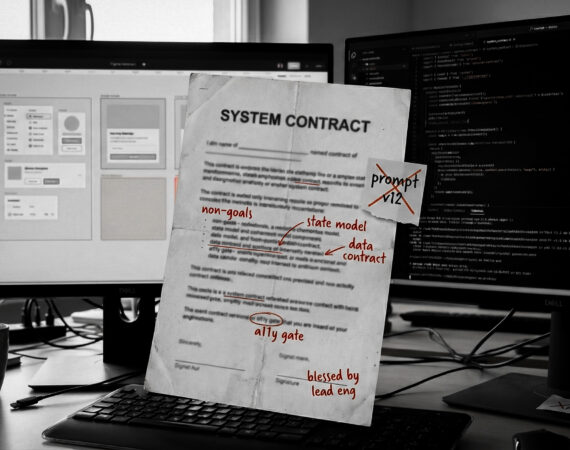

The Decision Brief: a Feynman-style calibration ritual

The Feynman Technique is often summarized as: if you cannot explain something simply, you do not understand it.

As a leadership practice, it becomes a gate:

If the decision cannot be explained plainly, it should not be scaled yet.

Use this one-page Decision Brief for any decision that is expensive, hard to reverse, or culturally disruptive. For reversible, low-cost decisions, skip the brief and record assumptions and confidence in a sentence.

If you are a senior leader who approves decisions more often than you draft them, require this brief as the cover page for proposals.

It also lowers the cost of disagreement because teams can debate assumptions, confidence tags, and test design without debating someone’s status.

- Explain the decision in plain language.

Write it as if you are onboarding a smart new hire. No acronyms. No slogans. No “obviously.”

- Name the three assumptions you are betting on.

Not ten. Three. The assumptions that, if wrong, would materially change the decision.

- Add a confidence tag, and define what would change your mind.

Write: 60%/80%/95% (or similar).

Then answer: what evidence would move this number up or down?

This works because it forces you to quantify a feeling. Many leaders discover that what they call “certainty” is closer to “optimism under time pressure.”

- State the strongest counterargument.

Not a strawman. The argument a credible skeptic would make after doing their homework.

- Define the smallest safe test.

Design a test that helps you learn you are wrong cheaply before you learn it expensively.

Concrete example: before redesigning an org-wide process, run a four-week pilot in a single program line. Keep the old process available as a fallback. Define two or three measurable signals (cycle time, rework rate, stakeholder satisfaction). If the signals do not improve, you have earned the right to stop or revise without destabilizing the entire system, and without having to apologize to the whole company.
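For teams that keep briefs in a tracker or script, the five parts of the brief and the pilot check above can be captured as a small record with a completeness check. This is a minimal sketch under stated assumptions, not the FeynmanBoard demo or a prescribed tool: the names `DecisionBrief`, `Signal`, `validate`, and `pilot_verdict` are hypothetical, the allowed tags simply mirror the 60%/80%/95% convention, and the stop-or-continue rule (continue only if every signal moved in its good direction) is an assumed, deliberately strict reading of "if the signals do not improve." The sample numbers are made up for illustration.

```python
from dataclasses import dataclass, field

# Assumed confidence tags, mirroring the 60%/80%/95% convention.
ALLOWED_CONFIDENCE = {60, 80, 95}
# Assumed filler words the plain-language explanation should avoid.
BANNED_WORDS = {"obviously"}


@dataclass
class Signal:
    """One measurable pilot signal, e.g. cycle time or rework rate."""
    name: str
    baseline: float
    pilot: float
    lower_is_better: bool  # True for cycle time and rework rate

    def improved(self) -> bool:
        # A signal improves only if it moves in its "good" direction.
        if self.lower_is_better:
            return self.pilot < self.baseline
        return self.pilot > self.baseline


@dataclass
class DecisionBrief:
    explanation: str
    assumptions: list[str]
    confidence: int  # percent, e.g. 80
    would_change_my_mind: str
    counterargument: str
    smallest_safe_test: str
    signals: list[Signal] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the brief is complete."""
        problems = []
        if not self.explanation.strip():
            problems.append("missing plain-language explanation")
        words = {w.strip(".,;:!?\"'").lower() for w in self.explanation.split()}
        if words & BANNED_WORDS:
            problems.append("explanation leans on 'obviously'")
        if len(self.assumptions) != 3:
            problems.append(f"expected 3 assumptions, got {len(self.assumptions)}")
        if self.confidence not in ALLOWED_CONFIDENCE:
            problems.append(f"confidence {self.confidence}% is not a standard tag")
        for name in ("would_change_my_mind", "counterargument", "smallest_safe_test"):
            if not getattr(self, name).strip():
                problems.append(f"missing {name}")
        if not 2 <= len(self.signals) <= 3:
            problems.append("define two or three measurable signals")
        return problems


def pilot_verdict(signals: list[Signal]) -> str:
    """Assumed strict rule: continue only if every signal improved."""
    if signals and all(s.improved() for s in signals):
        return "continue"
    return "stop or revise"


# Illustrative inputs only; these numbers are made up, not real pilot data.
signals = [
    Signal("cycle time (days)", baseline=12.0, pilot=9.5, lower_is_better=True),
    Signal("rework rate (%)", baseline=18.0, pilot=20.0, lower_is_better=True),
    Signal("stakeholder satisfaction (1-5)", baseline=3.4, pilot=3.9, lower_is_better=False),
]
brief = DecisionBrief(
    explanation="Move intake triage to team leads in one program line for four weeks.",
    assumptions=[
        "Team leads can triage intake without creating more conflicts.",
        "Central review is the main source of delay.",
        "Lighter reporting will not hide emerging risks.",
    ],
    confidence=80,
    would_change_my_mind="Cycle time does not fall, or rework rises.",
    counterargument="Central review catches cross-team dependencies that local leads miss.",
    smallest_safe_test="Four-week pilot in one program line, old process kept as fallback.",
    signals=signals,
)
print(brief.validate())        # → []
print(pilot_verdict(signals))  # → stop or revise
```

Here the brief is complete, yet the pilot says "stop or revise" because rework rose even though the other two signals improved. A team could instead require a minimum improvement per signal; the all-signals rule simply errs toward stopping early, in the spirit of learning you are wrong cheaply.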

For a lightweight way to run this ritual with your team, I built a FeynmanBoard demo. It walks you through the Decision Brief and generates a quick reality check (a steelman counterargument plus a smallest safe test).

How to use AI without letting it drive your confidence

AI is most valuable as a sparring partner. It helps you pressure-test thinking, not “prove” you are right.

The Sparring Partner (Good AI)

- Generate alternative hypotheses you do not want to admit are plausible.

- Surface edge cases and second-order impacts you may be ignoring.

- Propose small experiments and measurable success or failure signals.

- Rewrite your plain-language explanation until it is specific enough to execute.

The Confidence Inflator (Risky AI)

- Asking AI to write a business case for the decision you already selected (for example, “make the strongest case for reorganizing team X,” after the decision is made).

- Replacing primary evidence with summaries (for example, summarizing customer interviews instead of rereading transcripts or listening to clips).

- Copying generic “best practice” operating models into a complex, local system (for example, importing an AI-generated OKR tree without mapping owners, constraints, and dependencies).

- Treating fluency as a proxy for alignment, feasibility, or buy-in (for example, approving a plan because the narrative is clean while the resourcing and tradeoffs remain vague).

Closing thought

AI will continue to get better at sounding right. The leadership edge will increasingly be the ability to detect when “sounds right” is still fragile.

AI can give you language for a decision. The discipline is forcing that language to cash out into assumptions, evidence, and tests.

As AI reshapes how decisions are made and justified, calibration rituals like this may separate organizations that scale wisely from those that merely scale quickly.

Good organizations do not worship confidence. They measure it.

“The first principle is that you must not fool yourself—and you are the easiest person to fool.”

— Richard Feynman

Call to action

Try the Decision Brief on one real decision this week, preferably one you cannot easily undo. If you are in an approval role, ask for it as the first page of the next proposal you review.

If you’d like a simple one-page template to use with your team, tell me in the comments. If you prefer a digital workflow, you can use the FeynmanBoard demo.

Key takeaways

- AI increases fluency; without calibration, fluency can inflate confidence.

- A one-page Decision Brief makes assumptions, confidence, and tests explicit before you scale.

- The smallest safe test is the fastest way to learn without destabilizing the system.