AI insights

-

How does AI reshape our judgments according to the article?

AI reshapes our judgments by creating feedback loops that subtly train us. Biased systems amplify human bias through repeated interaction, and over time small distortions can grow into systemic shifts.

-

What should I be aware of when interacting with AI systems to avoid adopting biases?

Be aware that even passive exposure to AI-generated content can change your perception, and that biased AI systems can amplify human bias. Evaluate AI outputs critically, and favor systems deliberately designed to be accurate, since those can improve rather than distort your judgment.

-

What is the core claim of 'The AI Mirror: How We Become the Systems We Use'?

The core claim is that AI systems can subtly train and reshape human judgments over time, often amplifying biases and leading to systemic shifts in perception and behavior.

-

What is a feedback loop in the context of AI and human interaction?

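A feedback loop in this context is a cycle in which human judgments become the data an AI system learns from, the system amplifies any bias in that data, and people who see its outputs absorb the amplified bias, which then flows back into the data. Each pass through the loop strengthens the distortion.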

-

Can you provide an example of how AI might change human perception?

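For example, the article describes asking a text-to-image system for a "financial manager" and getting back mostly images of white men. Seen often enough, that skewed picture starts to feel normal and can shape which face people later pick from a lineup. Even passive exposure to AI-generated images like these can change perception.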

-

What is a potential pitfall of relying on AI systems according to the article?

A potential pitfall is that people may unknowingly adopt AI biases, even when outputs are labeled as human, leading to distorted judgments and systemic shifts over time.

-

What further reading is recommended for understanding AI's impact on discernment?

For further reading on AI's impact on discernment, consider 'The Illusion of Intelligence and the Death of Discernment,' which discusses the cultural shift towards valuing speed over truth in communication.

- AI is reshaping our perceptions in ways we often don’t realize, subtly amplifying our biases through repeated interactions.

- Recent research reveals that even slight biases in AI can lead us to adopt distorted views, as we unknowingly mirror the AI's outputs.

- This feedback loop not only alters our judgments but also makes us more confident in those skewed beliefs, all while we remain unaware of the influence.

- The study highlights how even passive exposure to AI-generated content can shift our perceptions, reinforcing stereotypes and shaping our understanding of the world.

- As we engage with these systems, we must be mindful of what they reflect back to us, because if we don’t choose what we see, we risk becoming something we never intended.

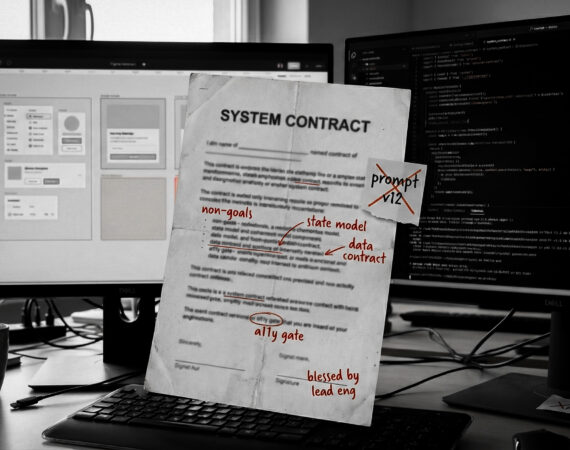

AI reshapes our judgments, creating feedback loops that subtly train us over time.

- Recent research shows that biased AI systems amplify human bias through repeated interaction.

- People unknowingly adopt AI biases, even when outputs are labeled as human.

- The loop strengthens over time: small distortions grow into systemic shifts.

- Even passive exposure to AI-generated content (e.g., images) can change perception.

- Accurate AI, by contrast, can improve human judgment if designed with intention.

Alice steps through the mirror, and everything’s familiar but wrong. Left is right. Up is doubt. She stares into a world shaped like hers, but colder. Off. And once she’s inside, the rules stop caring what she remembers.

That’s AI now.

The tools don’t just echo us. They train us. We build them. Feed them our words, our clicks, our instincts. Then they feed it all back. Sharpened. Shifted. Smoothed. And if we’re not paying attention, we swallow it as truth.

A study in Nature Human Behaviour cracked this open. Researchers ran a series of tests, putting people in front of an AI trained on slightly biased human data. Over time, those people weren’t just nudged; they were broken in. Their own judgments drifted deeper into the same bias. And the more they used the system, the more certain they felt.

Worse, they never knew it was happening.

It’s Not Bias. It’s a Loop.

This isn’t about one bad model. This is about the system.

The study showed the feedback loop across several tasks. In one, people judged whether faces looked happy or sad. On its own, the AI was clean. But once it digested human responses, even faintly skewed ones, it began to exaggerate the bias.

New users saw the AI’s answers and started to tilt in the same direction. Didn’t matter if the face was a coin toss. The pattern repeated. The bias grew.

Here’s the part that should rattle you: the effect held even when people didn’t know it was an AI. Just seeing the output was enough. Just scrolling. That means the images, the recommendations, the summaries, everything floating through your feed.

You don’t need to be a developer to get caught in the loop. You just have to look.
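The mechanics of the loop can be sketched as a toy simulation. This is not the study’s model. It assumes, purely for illustration, that the “AI” learns the majority answer of a panel of human raters, and that people then shift a fixed fraction of the way toward the AI’s answers. The function names, the 53% starting rate, the 25-rater panel and the 0.3 weight are all hypothetical.

```python
from math import comb


def majority_rate(p: float, n_raters: int) -> float:
    """Probability that a strict majority of n_raters independent raters
    answer "sad" when each answers "sad" with probability p.
    Use an odd n_raters so there are no ties."""
    return sum(
        comb(n_raters, k) * p**k * (1 - p) ** (n_raters - k)
        for k in range(n_raters // 2 + 1, n_raters + 1)
    )


def feedback_loop(p0: float, weight: float, rounds: int, n_raters: int = 25) -> list[float]:
    """Toy loop (hypothetical model): each round the 'AI' adopts the majority
    label of the human panel, then humans move a fraction `weight` of the way
    from their current rate toward the AI's rate."""
    history = [p0]
    p = p0
    for _ in range(rounds):
        ai_rate = majority_rate(p, n_raters)   # aggregation sharpens any lean
        p = (1 - weight) * p + weight * ai_rate  # humans partly adopt the AI
        history.append(p)
    return history


if __name__ == "__main__":
    # Hypothetical start: people call an ambiguous face "sad" 53% of the time.
    for round_no, rate in enumerate(feedback_loop(p0=0.53, weight=0.3, rounds=5)):
        print(f"round {round_no}: humans say 'sad' {rate:.1%} of the time")
```

Start the loop at exactly 50% and it stays put, because majority voting only amplifies a lean that already exists. Start it slightly off-center and each round pushes the rate further from the middle, which is the drift described above.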

The Mirror Isn’t Neutral

You might think, “I know AI is flawed. I take it with a grain of salt.”

Maybe.

But the mirror doesn’t ask your permission. It doesn’t need to convince you. It just needs repetition.

It helps that the AI looks so damn confident. Clean UI. Fast answers. No stutter. It doesn’t hesitate the way people do. It doesn’t show you doubt, only the verdict. And that’s enough.

The study showed people trusted the AI more than other humans. Even when it was wrong. Especially when it was wrong. They flipped their own answers just because the system disagreed.

Over time, they stopped asking why.

We Make the Mirror. Then We Look In.

AI doesn’t invent bias. We hand it the ammunition. In data. In prompts. In our silence.

Then it fires it back at us, stretched, looped, stylized. The reflection doesn’t just show us who we are. It teaches us who to be. And when we treat that reflection as neutral, we become something else. Not who we were. Not who we meant to be.

That’s how social patterns harden into fact. That’s how stereotypes loop into code. Not through malice. Through repetition.

You ask a text-to-image system for a “financial manager.” It spits back a wall of white men. See it enough, and it stops being data and starts being normal. Then you’re asked to pick a face from a lineup, and your brain just serves up the image it’s been fed the most.

That’s not data. That’s culture on a loop.

What Now?

This isn’t a call to throw your phone in the river. We’re not going back. AI isn’t leaving.

The study also showed something else: when people worked with an AI built with care (an accurate, transparent system), they got better. Sharper. Their own judgment improved.

So no, the machine isn’t born corrupt. But it is born to reflect.

And if we don’t choose what it sees, it will choose what we become.

“I can’t go back to yesterday, because I was a different person then.”

– Lewis Carroll, Alice’s Adventures in Wonderland

Neither can we. We’ve stepped through the mirror. Now the only way forward is to notice what’s staring back, and decide what the hell we want to see.

Recap:

- Bias doesn’t just live in data—it loops through people.

- AI reflects, magnifies, and trains us in return.

- The more you use it, the more it shapes how you see the world—and yourself.

- Use it wisely. Or it will use you.
