AI insights


How does AI influence human judgment?

AI reshapes our judgments by creating feedback loops that amplify human biases through repeated interaction. Even passive exposure to AI-generated content can change perception, leading to systemic shifts over time.


What is the main challenge with the rapid dissemination of information today?

The main challenge is that it has become easy to sound smart and just as easy to be wrong. The culture now prioritizes speed over truth, leading to a decline in discernment.


Why might traditional product management roles become obsolete?

AI tools are increasingly capable of translating ideas into products without the need for traditional roles like Product Managers, who act as bridges between vision and execution.


What is a significant barrier to organizational change?

A significant barrier to change is the comfort with familiar processes, even when they no longer serve organizational goals. This resistance can derail progress and hinder transformation.

Sources: Change Isn’t the Enemy, Comfort Is

How can AI improve human judgment?

Accurate AI, when designed with intention, can improve human judgment by providing unbiased and reliable outputs, counteracting the amplification of human biases.


What role do frameworks like Kotter’s and Kaizen play in organizational change?

Frameworks like Kotter’s and Kaizen guide real transformation by providing structured approaches to change, helping organizations overcome internal resistance and improve efficiency.

Sources: Change Isn’t the Enemy, Comfort Is

What is the cultural tipping point mentioned in the context of information dissemination?

The cultural tipping point is the moment society stopped questioning the truth of information and began celebrating the speed at which it can be communicated, often at the expense of accuracy.

- In today's fast-paced digital world, it's never been easier to sound smart, but that doesn't mean we're getting it right.

- An ad urging people to "write an ebook before lunch" highlights a troubling trend: speed and appearance are trumping truth and substance.

- With AI tools making content creation a breeze, the rigorous process of writing, editing, and fact-checking is being sidelined.

- This shortcut culture risks diluting knowledge and undermining trust.

- The absence of editors and critical thought means that looking credible is easier than being credible.

- We need to reintroduce friction into our thinking processes to ensure depth and integrity in our information.

“We’ve made it easy to sound smart.

Too bad we forgot to make it hard to be wrong.”

Scrolling through social media the other day, I stumbled across an ad that stopped me cold. It showed a sticky note slapped onto a screen, scribbled with:

“Write an ebook before lunch!”

Catchy? Sure. Impressive? Maybe. But mostly, it felt like a cultural tipping point, the moment we stopped asking if something was true and started celebrating how fast we could say it.

With over a decade of experience in publishing and newspapers, I’ve seen firsthand what it takes to produce something real. Writing, editing, proofing, arguing over a single sentence: that was the grind. And while I’m no enemy of progress, what we’re calling “progress” today looks a lot more like shortcut culture dressed up in confidence.

Thanks to AI tools, anyone with a Wi-Fi signal and a keyboard can spin up a “best-selling” ebook in a morning. Packaging has never been easier. Publishing has never been faster. And sounding like an expert is practically automatic.

But here’s the problem:

Ease breeds arrogance. The slicker the output, the less we question what went into it.

Why This Matters

We used to think before we published. There were editors, fact-checkers, red pens, and second thoughts. The delay was frustrating, sure, but it served a purpose. It forced us to slow down and ask, Is this right?

Now, the first draft is the final draft. Publishing is a click. Viral phrasing trumps verified facts. Peer review has been replaced by peer reach – whoever shares it first, wins.

We’re not evolving knowledge anymore. We’re diluting it. Drowning it in a sea of AI-written ebooks, autogenerated advice threads, and confident-sounding blog posts that skip the part where anyone checks the math.

And it’s not just content consumers being misled; it’s students and professionals, too. I see it in higher education, where I regularly have to challenge students who rely on oversimplified, AI-generated articles instead of their textbooks. The result? Shallow assumptions and missing context, the very opposite of the deep thinking that higher education is meant to cultivate.

Looking Smart vs. Being Smart

There’s a difference between looking intelligent and being intelligent. Most people know this, deep down. But in a world where aesthetics and speed masquerade as substance, the difference gets harder to detect.

And when intelligent people realize they’ve been fooled? They rarely blame the author. They blame themselves. That’s when trust doesn’t just erode, it combusts.

Because betrayal of trust isn’t just external. It’s internal. And people who feel complicit in their own deception tend to retaliate. Not with disappointment. With outrage. The price of pretending to be wise is paid with interest.

Even in professional environments, I’ve seen capable people shortcut their thinking because AI made it so easy. One colleague I collaborate with understands the challenges of AI deeply, and yet, when presenting product ideas, they pass off AI-generated insights as their own. The result isn’t just awkward. It undermines the integrity of the product. Because when you don’t know why a decision was made, innovation suffers, and trust collapses.

The Disappearance of the Editor

Once upon a time, editors were the immune system of truth. They challenged assumptions. They slowed us down. They made things better, and more often, they stopped bad things from getting out.

Today? The editor’s gone. The proofreader’s gone.

All that’s left is the algorithm and a thousand eager prompts.

And what’s replacing critical thought? Format. Fonts. SEO tags. The performance of authority.

As a result, truth has become optional, and authority has become a look, not a substance. If something sounds smart, we assume it must be. But when everyone’s an author and no one’s an editor, looking credible is easier than being credible.

Democracy of Thought ≠ Equality of Insight

There’s a law in physics: no real system runs at 100% efficiency. Friction, heat, loss: these are features of reality, not bugs. And when we try to remove all resistance from thinking, we’re not achieving intelligence; we’re achieving entropy.

We’ve democratized content creation. That’s good. But we’ve also flattened the critical thinking process. That’s dangerous. Not everyone should be able to bypass the mental effort required to understand complexity.

Some gates are there for a reason.

And the more we automate sounding smart, the less incentive we have to be smart. That’s not progress. That’s decay.

Final Thought

“When everyone’s an author and no one’s an editor, truth becomes optional, and authority becomes a look, not a substance.”

But don’t forget this:

People eventually realize when they’ve been fooled. And when they do, they don’t just lose trust. They come back swinging.

Key Takeaways:

- Looking smart has never been easier, but that doesn’t make the information right.

- The editorial layer was a safeguard. Without it, credibility becomes a design choice.

- We need to reintroduce friction into how we create and consume information.

- Critical thinking isn’t elitism, it’s efficiency with a cost. And the cost is worth paying.

- Delegating understanding to AI can lead to shallow thinking and missed innovation.

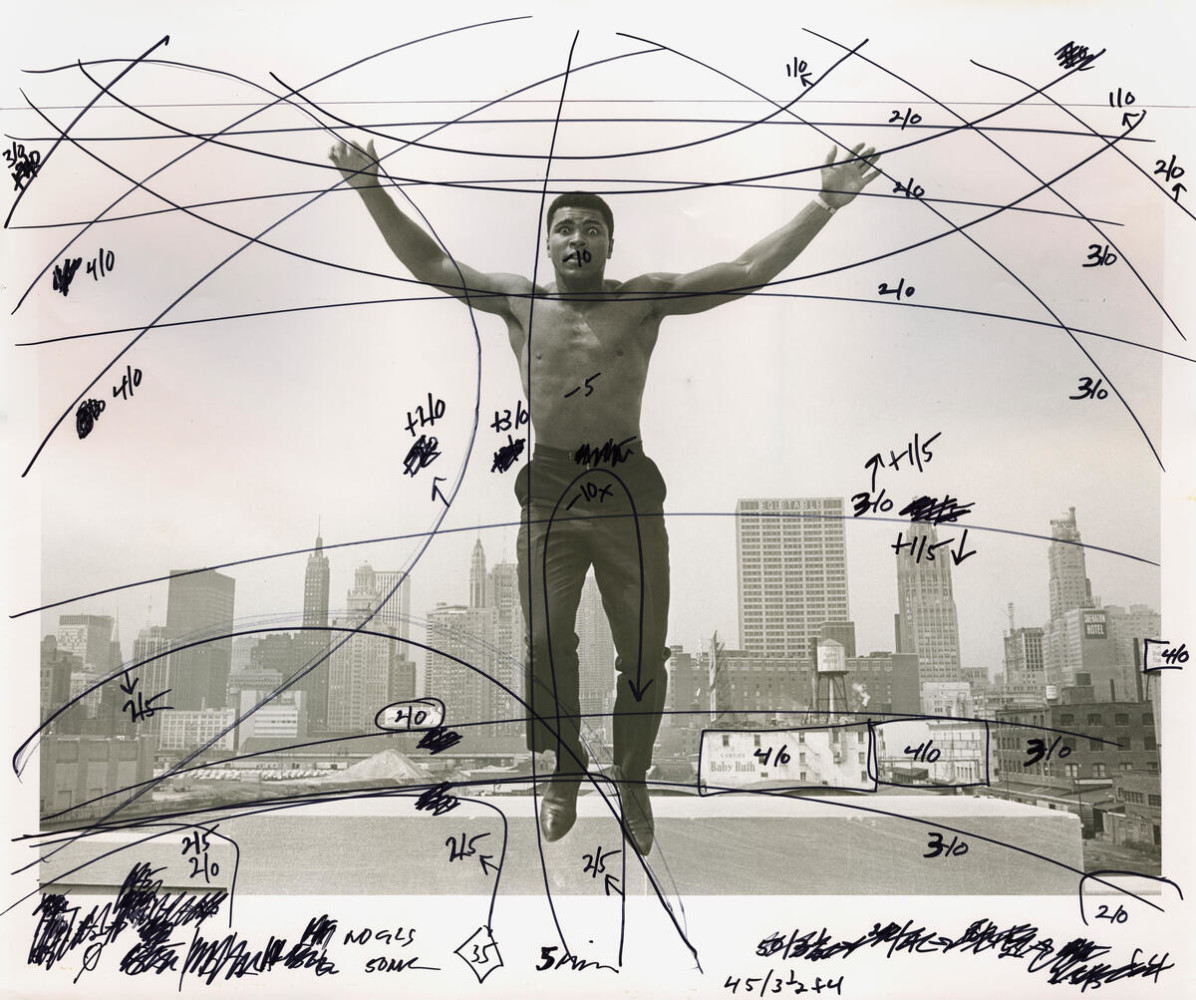

Photo credit: Guide print marked with Pablo Inirio’s notes for darkroom printing. © Thomas Hoepker / Magnum Photos
